MetaKube

SysEleven MetaKube is the managed Kubernetes service from SysEleven, a Berlin-based managed hosting company.

MetaKube is a Certified Hosted Kubernetes Platform, which ensures that its Kubernetes clusters are fully standards-compliant. The conformance test results for all supported versions are publicly available in the CNCF k8s-conformance GitHub repository.

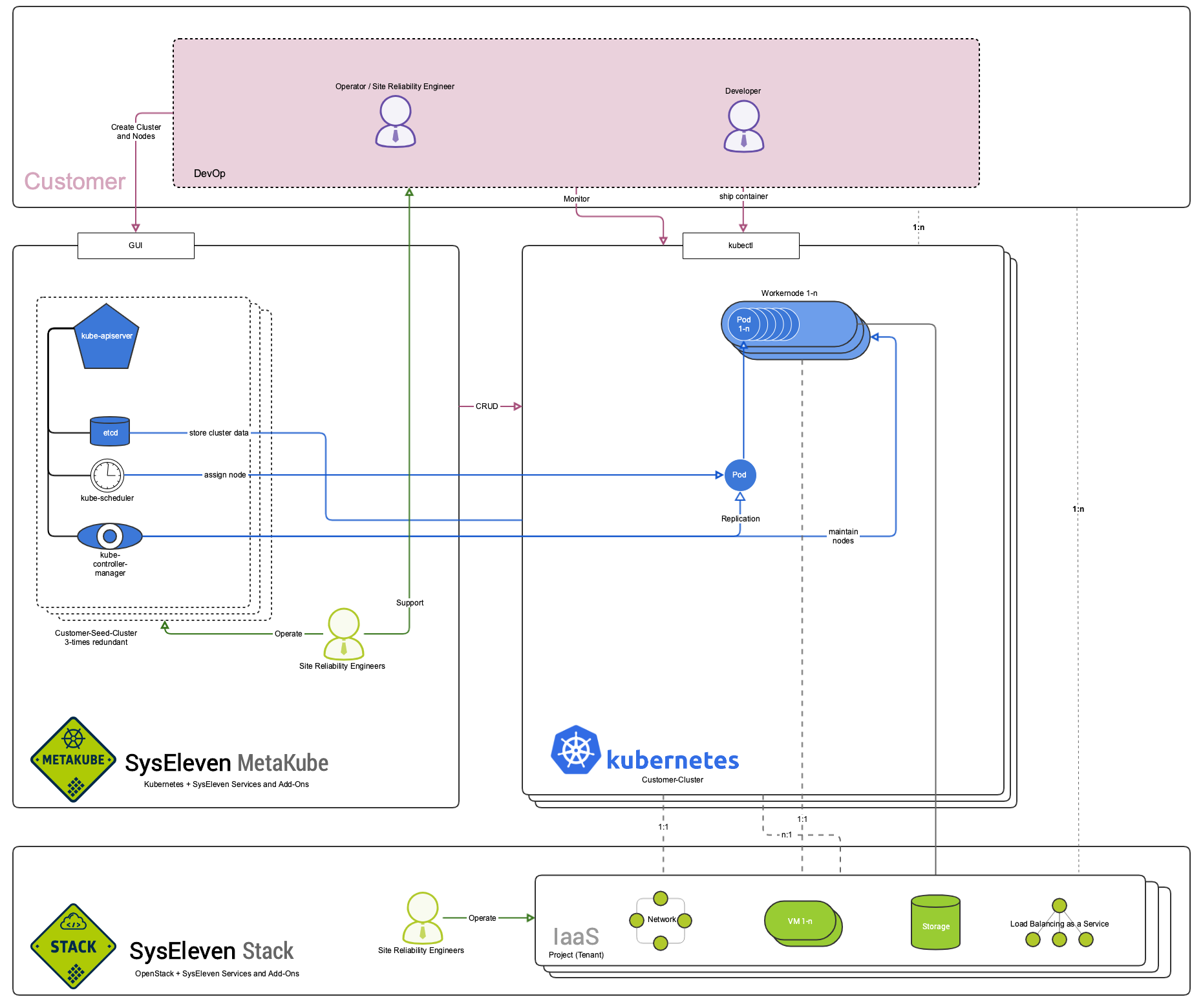

Architectural Overview

The following chart gives a rough overview of what it means to have Kubernetes managed by us. You do not have to struggle with setting up the cluster yourself or worry about the security and scalability of the control plane components; we have already done that for you. Just spin up your cluster in our web interface and enjoy high-performance Kubernetes clusters on the SysEleven Stack.