Horizontal Node Autoscaler

MetaKube Kubernetes clusters support horizontal node autoscaling out of the box.

This document describes the functionality and features related to autoscaling.

If you want to install and test the horizontal node autoscaler in your own cluster, we recommend also reading the node autoscaler tutorial.

Activating the Node Autoscaler

The horizontal node autoscaler runs in SysEleven-managed infrastructure together with the rest of the control plane components.

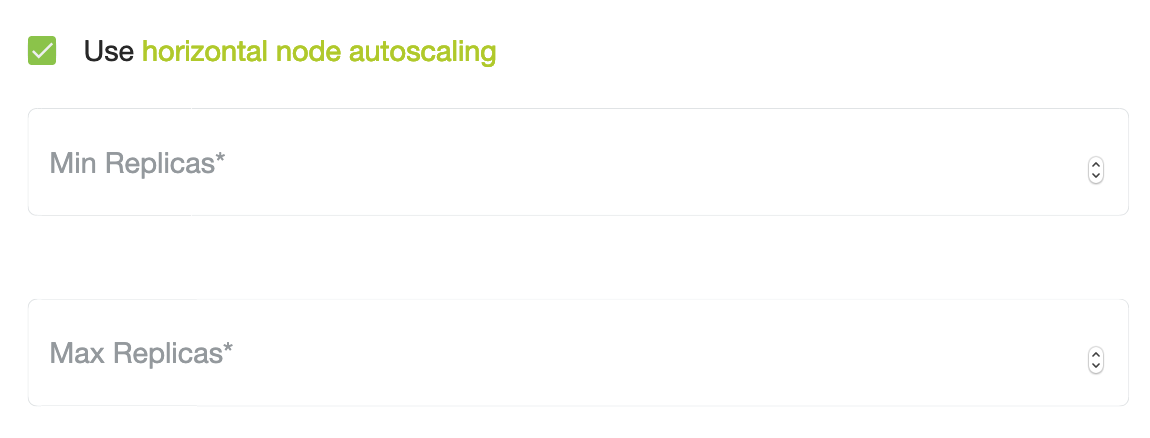

It can be activated by setting the minimum and maximum number of replicas for a node deployment.

One way to achieve this is through the MetaKube dashboard.

Another way to configure autoscaling nodes is to use terraform-provider-metakube.

It is also possible to add the annotations for the node autoscaler manually to the machinedeployment resource using kubectl:

    ...
    annotations:
      cluster.k8s.io/cluster-api-autoscaler-node-group-min-size: "1"
      cluster.k8s.io/cluster-api-autoscaler-node-group-max-size: "15"
    ...

For more information, please have a look at the documentation for the cluster-autoscaler.
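As an illustration of the annotation format, the following minimal sketch builds a JSON merge patch containing the two annotations. The helper name `autoscaler_patch` and its validation rule are hypothetical and not part of MetaKube; only the annotation keys come from this document. Annotation values must be strings, which is why the numbers are quoted.

```python
import json

# Annotation keys as documented above.
MIN_KEY = "cluster.k8s.io/cluster-api-autoscaler-node-group-min-size"
MAX_KEY = "cluster.k8s.io/cluster-api-autoscaler-node-group-max-size"


def autoscaler_patch(min_size: int, max_size: int) -> str:
    """Return a JSON merge patch that sets both autoscaler annotations.

    Hypothetical helper for illustration only.
    """
    if min_size < 0 or max_size < min_size:
        raise ValueError("need 0 <= min_size <= max_size")
    patch = {
        "metadata": {
            "annotations": {
                # Kubernetes annotation values are always strings.
                MIN_KEY: str(min_size),
                MAX_KEY: str(max_size),
            }
        }
    }
    return json.dumps(patch, indent=2)


print(autoscaler_patch(1, 15))
```

A patch like this could, for example, be passed to `kubectl patch` with `--type merge` against the machinedeployment resource; the resource name and namespace depend on your cluster.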

Scaling up

The horizontal node autoscaler will try to add nodes to a node deployment if all the following conditions are met:

- The minimum and maximum number of replicas are specified (see annotations above)

- The current number of replicas is lower than the maximum number of replicas

- Pods cannot be scheduled due to limited resources

When all conditions are met, the autoscaler calculates the number of nodes required to schedule all pending pods and creates that number of nodes in a single scaling step.
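The scale-up conditions can be sketched roughly as follows. This is a simplified model, not the autoscaler's actual implementation: the real autoscaler simulates scheduling, whereas this sketch assumes a fixed, hypothetical `pods_per_node` capacity. Capping the result at the maximum number of replicas is also an assumption derived from the conditions above. All names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeDeployment:
    replicas: int
    min_replicas: Optional[int]  # None means the annotation is not set
    max_replicas: Optional[int]  # None means the annotation is not set


def nodes_to_add(nd: NodeDeployment, unschedulable_pods: int, pods_per_node: int) -> int:
    """Return how many nodes to create in one scaling step (0 if any condition fails)."""
    if pods_per_node <= 0:
        raise ValueError("pods_per_node must be positive")
    # Condition 1: minimum and maximum replicas are specified.
    if nd.min_replicas is None or nd.max_replicas is None:
        return 0
    # Condition 2: current replicas are below the maximum.
    if nd.replicas >= nd.max_replicas:
        return 0
    # Condition 3: there are pods that cannot be scheduled.
    if unschedulable_pods <= 0:
        return 0
    # Simplification: assume every node fits the same number of pending pods.
    needed = -(-unschedulable_pods // pods_per_node)  # ceiling division
    # Assumption: never grow beyond the configured maximum.
    return min(needed, nd.max_replicas - nd.replicas)
```

For example, with 3 replicas, a maximum of 15, 7 pending pods and an assumed capacity of 3 pods per node, the sketch returns 3 nodes to add in one step.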

It can happen that pods are temporarily in a pending state, even when the number of nodes is technically sufficient to schedule all pods.

In such situations it is possible that the autoscaler adds one extra node temporarily.

Scaling down

The autoscaler will reduce the number of nodes in a node deployment if all the following conditions are met:

- The minimum and maximum number of replicas are specified (see annotations above)

- The current number of replicas is higher than the specified minimum number of replicas

- The last scale-up hasn't happened within the last 10 minutes

- All pods can be scheduled on fewer nodes.

When all the above conditions are met, the autoscaler will calculate the number of spare nodes.

Those nodes will be drained and removed from the node deployment.
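The scale-down conditions can be sketched in the same spirit. This is a simplified model under stated assumptions: `nodes_needed` stands in for the autoscaler's own calculation of how many nodes are required to run all pods, and the function name and parameters are hypothetical. Only the four conditions and the 10-minute window come from the list above.

```python
from datetime import datetime, timedelta
from typing import Optional

# The 10-minute window after the last scale-up, as listed above.
SCALE_UP_COOLDOWN = timedelta(minutes=10)


def nodes_to_remove(
    replicas: int,
    min_replicas: Optional[int],
    max_replicas: Optional[int],
    nodes_needed: int,
    last_scale_up: Optional[datetime],
    now: datetime,
) -> int:
    """Return the number of spare nodes to drain and remove (0 if any condition fails)."""
    # Condition 1: minimum and maximum replicas are specified.
    if min_replicas is None or max_replicas is None:
        return 0
    # Condition 2: current replicas are above the minimum.
    if replicas <= min_replicas:
        return 0
    # Condition 3: no scale-up within the last 10 minutes.
    if last_scale_up is not None and now - last_scale_up < SCALE_UP_COOLDOWN:
        return 0
    # Condition 4: all pods fit on fewer nodes; never go below the minimum.
    spare = replicas - max(nodes_needed, min_replicas)
    return max(spare, 0)
```

With 5 replicas, a minimum of 1, 3 nodes needed and the last scale-up 30 minutes ago, the sketch reports 2 spare nodes; if the last scale-up was only 5 minutes ago, it reports 0.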

The autoscaler will not drain and remove nodes running certain pods from the kube-system namespace or pods using local storage.

Therefore, the autoscaler may not scale down to the minimum number of replicas, even in cases where pods could theoretically be scheduled on fewer nodes.

Since the horizontal node autoscaler runs in the cluster itself, it requires at least one node in the cluster. It is therefore not possible to scale the cluster down to zero nodes.

The autoscaler does not consider if nodes are running pods that use local volumes when scaling down.

While a PodDisruptionBudget can prevent a node drain, we recommend not using autoscaling on node deployments where local storage is used.

Example configuration

For a full tutorial and troubleshooting, check out "Use Horizontal Node Autoscaling".